General Purpose Computation

What is the meaning of “general purpose computation”? According to a Google search, general purpose computing is: “A general–purpose computer is one that, given the appropriate application and required time, should be able to perform most common computing tasks.”

That result defines a general-purpose computer rather than general purpose computation, so it does not quite answer the question. At MaiTRIX, we’ve defined general purpose computation in another way: “General purpose computation is digital arithmetic using a finite numeric representation which comprises a fractional and a whole portion and which performs arithmetic operations and provides arithmetic results that are approximately equal to the exact results of the real number system.”

In our definition, emphasis is placed on the use of a number system that employs both a whole number part and a fractional part. Without this requirement, other alternatives remain, such as integer arithmetic or rational number arithmetic. But integer arithmetic alone cannot provide for general purpose computation. Rational quantities can be manipulated for general purpose computation, but their representations can grow without bound as a calculation proceeds, so this method is not accepted as a concise or efficient method of machine computation.
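The growth of rational representations is easy to see in a small experiment. The following Python sketch is only an illustration under assumed inputs: the iteration x -> r*x*(1-x), the starting values, and the function name are all chosen for the example and do not come from the text above. It uses the standard library's exact `Fraction` type and prints how many bits the denominator needs after each step.

```python
from fractions import Fraction

def logistic_orbit(x0: Fraction, r: Fraction, steps: int) -> list[Fraction]:
    """Iterate x -> r*x*(1-x) with exact rational arithmetic.

    Returns the starting value followed by every iterate.
    """
    xs = [x0]
    for _ in range(steps):
        x = xs[-1]
        xs.append(r * x * (1 - x))
    return xs

# Assumed example inputs: x0 = 1/3, r = 7/2, six iterations.
orbit = logistic_orbit(Fraction(1, 3), Fraction(7, 2), 6)
for step, x in enumerate(orbit):
    # The denominator roughly doubles in bit length at every step.
    print(step, x.denominator.bit_length())
```

With these inputs the denominator is squared at every step, so the storage needed doubles per iteration even though the value itself stays between 0 and 1. A fixed-size format would instead round each result and keep the storage constant.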

A rather remarkable yet underappreciated fact about general purpose computation is that its existence depends almost entirely on either fixed-point or floating-point arithmetic. In almost all cases of machine computation, the underlying number system is binary. The binary number system is inherently an unsigned integer number system; however, the use of two’s complement and a fixed binary fraction point transforms this simple integer binary system into a powerful general-purpose “fractional” number system. The case is similar with floating-point: a basic unsigned binary system is transformed into a fractional number system which comprises a fractional value, an exponent and a sign flag. Interestingly, the integer portion of a floating-point value consists of the most significant bits of the fractional value once the exponent “shifts” these bits to the left of the fraction point.
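A minimal Python sketch can make both ideas concrete. The Q16.16 layout (32-bit word, 16 fraction bits) and every function name here are assumptions chosen for illustration, not a format described in this article. The fixed-point part shows that ordinary two's complement integer operations plus an implied binary point give fractional arithmetic; the last lines use `math.frexp` to show a floating-point value as a fraction and an exponent.

```python
import math

WORD_BITS = 32                 # assumed word size
FRAC_BITS = 16                 # assumed position of the binary point (Q16.16)
SCALE = 1 << FRAC_BITS
MASK = (1 << WORD_BITS) - 1

def to_fixed(x: float) -> int:
    """Encode x as a 32-bit two's complement word with 16 fraction bits."""
    return round(x * SCALE) & MASK

def to_signed(word: int) -> int:
    """Interpret a 32-bit word as a two's complement signed integer."""
    return word - (1 << WORD_BITS) if word & (1 << (WORD_BITS - 1)) else word

def from_fixed(word: int) -> float:
    """Decode a Q16.16 word back to a Python float."""
    return to_signed(word) / SCALE

def fx_add(a: int, b: int) -> int:
    """Plain integer addition; wraps silently if the result overflows."""
    return (a + b) & MASK

def fx_mul(a: int, b: int) -> int:
    """Integer multiply, then shift right to restore the binary point."""
    return ((to_signed(a) * to_signed(b)) >> FRAC_BITS) & MASK

a, b = to_fixed(1.5), to_fixed(-2.25)
print(from_fixed(fx_add(a, b)))   # -0.75
print(from_fixed(fx_mul(a, b)))   # -3.375

# Floating-point view: 6.5 = 0.8125 * 2**3. The fraction 0.1101b shifted
# left by 3 places becomes 110.1b, so its top bits form the integer part 6.
fraction, exponent = math.frexp(6.5)
print(fraction, exponent)         # 0.8125 3
```

Note that `fx_add` wraps on overflow without any warning, which is the kind of range bookkeeping that fixed-point leaves to the programmer.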

Floating-point hardware

The floating-point number format and the libraries and hardware units that implement floating-point arithmetic deserve much attention when it comes to understanding general purpose computation. In the early days of computing, there were heated debates regarding whether to use floating-point or fixed-point arithmetic. John von Neumann advocated for the use of fixed-point arithmetic in his IAS computer; however, he may have been biased by the difficulty of implementing floating-point arithmetic using the vacuum tube (valve) technology of the time.

Others were not so persuaded, like Konrad Zuse, architect of the Z3 computer; he was first to use a binary floating-point representation in a computer. Zuse also created the Z4, designed in 1942-1945, the first commercial machine to use floating-point hardware. A decade later, several early computer companies offered floating point on their machines; in those days the existence of floating-point hardware characterized a machine as a scientific computer. By the early 1980s, however, Intel began offering floating-point hardware as an optional co-processor to its 80x86 CPUs. In 1989 Intel introduced its i486 series processor, which included floating-point hardware as a standard feature. Floating-point hardware became a standard feature in all high-end CPUs thereafter.

For most general-purpose applications, floating-point computation is desirable because it relieves the programmer from having to worry about arithmetic ranges and related issues (for the most part). Floating-point hardware also handles important arithmetic exceptions, such as overflow and underflow, and these exceptions are often integrated into a computer’s OS. The floating-point number system can and does perform amazing feats. But floating-point is not a singular solution, in that many variations of floating-point number systems exist.

The many variations of floating-point hardware created problems before standards such as IEEE 754 emerged and were ultimately adopted by all manufacturers. Before this standardization, floating-point arithmetic was viewed by some as having seemingly non-deterministic behavior because one computer would provide results that disagreed with another computer! In the early days of computing, these discrepancies between different implementations of floating-point were poorly understood; this in turn made porting software and verifying programs very difficult.

Fixed-point binary libraries exist, but hardware support for fixed-point arithmetic in modern computers is dismal to say the least. Fixed-point hardware is found in some early DSPs, for example. It is commonly understood that fixed-point arithmetic, if required, can be implemented in software in most cases. But critical support, such as print specifiers, input and output methods, and overflow detection, is sorely lacking for fixed-point arithmetic in almost all computer languages and operating systems. Instead, these languages and operating systems provide such support for floating-point only.

Near-total reliance on standardized floating-point arithmetic has its benefits, but it also has its hazards. Today, new demands are being placed on arithmetic computation. For example, artificial intelligence algorithms, such as convolutional neural networks, require much more computation than a single floating-point unit can deliver. More importantly, the floating-point unit, while a great general-purpose unit, is not well suited to AI because it is inefficient at accumulation (on non-normalized values), the backbone of AI.

The future of computation will require more dedicated arithmetic units, and new types of hardware computation will be needed to drive the staggering amount of processing ahead. The spirit and the genius of all who designed the floating-point unit are required once again!

Brief history of the residue number system (RNS) in computer design

In the late 1950s, several attempts were made to design a computer using an “unconventional” number system. Some of these efforts involved using a newly described number system called “residue numbers” (in the USA) or “rest classes” (in Eastern Europe and Russia). In fact, the earliest publication on residue number-based computer systems appears to be by M. Valach of Czechoslovakia in 1955 (subsequently translated into English by the US Air Force). The suggestion to use residue numbers in computers also traces back to a letter sent in 1956 by Antonin Svoboda of Czechoslovakia to M.A. Lavrentyev of Russia, describing a new method of machine computation using modular arithmetic.

In 1957, several Russian scientists and computer designers, including I.Y. Akushskiy and D.I. Yuditsky, formed the first design team in the USSR to develop a computer using residue number arithmetic. This first attempt may have been in response to news that similar research was being conducted in the USA. However, the project was a failure; available literature suggests that not all researchers were on board, and that the residue number system, while carry free, presents many obstacles for machine computation. Even so, I.Y. Akushskiy was impressed with the idea of using residue numbers in computers, and it became his subject of study for the remainder of his life.

In the USA, the first work on residue numbers appeared concurrently yet independently in 1958, in work by H.L. Garner (using residue codes in error correction) and by the noted professor Howard Aiken of Harvard University. In 1959, H.L. Garner published the article entitled “The Residue Number System”, which characterized the system and operations on residue classes as a new number system called the residue number system (RNS). Several other individuals and research companies also developed work in RNS, most of them apparently under contract to the US Air Force or Naval research organizations.

Small groups of scientists and researchers in the USA, Russia, Czechoslovakia, Japan and other countries conducted research into RNS from about 1960 to 1967. This was a period in which RNS continued to be explored as a real potential solution for machine arithmetic, if not a total solution then perhaps in part. As for an actual RNS computer system built in the USA, one project was contracted by the US Air Force to the Radio Corporation of America to construct an RNS computer; this machine was a prototype and was used to generate a report on the pros and cons of RNS arithmetic. Apparently, the report was not persuasive, as the project did not lead to any other RNS computer contracts in the US as far as available literature confirms. Finally, progress was made in Russia with the development of a missile control computer using RNS arithmetic, developed by D.I. Yuditsky and called the T-340A, in 1963. An improved control computer developed by D.I. Yuditsky was successfully deployed in 1966 and designated K-340A. Some of these early Russian computers and their related structure saw use as late as 2006!

Despite some limited success of RNS based computers (primarily in Russia), the commercial computer world turned away from RNS based computers as the age of micro-electronics and the IC revolution came to fruition by the early 1970s. The micro-electronics revolution quickly evolved into the micro-computer revolution, which helped solve many of the pressing issues of building complex multipliers requiring thousands of transistors; hence the advantages of supporting an RNS based computer system diminished substantially. However, before the end of the 1960s, two researchers, Nicholas S. Szabo and Richard I. Tanaka, gathered and compiled much data and information on the construction of computers using RNS in order to preserve this unique period in computer history in a book entitled “Residue Arithmetic and its Applications to Computer Technology”. Many other important papers have been published since, and thus the subject area of RNS based arithmetic circuits has expanded greatly.

Even so, the subject area of published RNS research is not unified in any way, and thus no real progress or innovations from the RNS field have made it into modern computer systems in a substantial way. In fact, many researchers believe that general purpose computation using RNS is not possible, or at least not feasible. Some of the common problems cited are the difficulty of RNS to binary conversion, RNS digit base-extension, and RNS division, to name a few troublesome operations. Another major obstacle is the perception that RNS is an “integer only” number system, which, given this view, represents a severe limitation for computation of most problems of interest.
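For readers new to RNS, a minimal Python sketch of integer residue arithmetic may help. The moduli (7, 11, 13, 15) and all function names are illustrative assumptions and are not taken from any design mentioned in this article. Addition and multiplication act on each residue digit independently, with no carries between digits, while conversion back to binary uses the Chinese Remainder Theorem and needs wide arithmetic across all digits, which hints at why conversion is one of the commonly cited difficulties.

```python
from math import prod

# Assumed pairwise coprime moduli; the representable range is M = 15015.
MODULI = (7, 11, 13, 15)
M = prod(MODULI)

def to_rns(x: int) -> tuple[int, ...]:
    """Binary to RNS: one small remainder ("digit") per modulus."""
    return tuple(x % m for m in MODULI)

def rns_add(a, b):
    """Digit-wise addition; no carry passes between digits."""
    return tuple((x + y) % m for x, y, m in zip(a, b, MODULI))

def rns_mul(a, b):
    """Digit-wise multiplication; each digit is computed independently."""
    return tuple((x * y) % m for x, y, m in zip(a, b, MODULI))

def from_rns(digits) -> int:
    """RNS to binary via the Chinese Remainder Theorem."""
    total = 0
    for d, m in zip(digits, MODULI):
        Mi = M // m
        # pow(Mi, -1, m) is the modular inverse of Mi mod m (Python 3.8+).
        total += d * Mi * pow(Mi, -1, m)
    return total % M

a, b = to_rns(123), to_rns(45)
print(a)                          # (4, 2, 6, 3)
print(from_rns(rns_add(a, b)))    # 168
print(from_rns(rns_mul(a, b)))    # 5535
```

Results are only correct while they stay below M; a product such as 200 * 100 wraps around modulo 15015. This sketch is integer-only and does not address base-extension, division, or fractional values.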

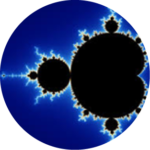

However, in the summer of 2013, Digital System Research, Inc (DSR) accelerated the design and development of a second generation RNS ALU called Rez-9. Daniel Anderson, a graduate student at UNLV, joined the team and was tasked with implementing instruction execution and control circuitry previously modeled and implemented in software by Olsen. Working together, Olsen and Anderson completed a hardware prototype of the Rez-9 in December of 2014. One day before the presentation of the Rez-9 as part of Anderson’s thesis, the Rez-9 successfully executed a Mandelbrot plotting operation, which demonstrated the first sustained, iterative, fractional RNS processing in hardware. (Software simulations of RNS Mandelbrot calculations had been developed several years earlier by Olsen.) The next day, the Rez-9 prototype was publicly demonstrated for the first time by Daniel Anderson during his thesis presentation at the University of Nevada, Las Vegas (UNLV).

Maitrix continues to pioneer solutions to general purpose processing using RNS, and has shown that RNS is not an integer only number system! Moreover, Maitrix has pioneered its digital RNS solutions using a coherent strategy, which means that each RNS module works with every other module in a seamless fashion. As a result, RNS has been re-awoken, and its true benefits and power are now being re-discovered! The outcome is a highly efficient arithmetic and computational system for massive product summations, a key operation in AI and CNN algorithms. Moreover, many other features are being brought forward, including higher speed, higher accuracy, and true error correcting capabilities. Maitrix has endeavored to bring this massive effort forward to the technology marketplace. Even so, acceptance of a non-binary number system is today limited at best, and this and other obstacles are currently the focus of Maitrix researchers.
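As a rough illustration of why product summation maps naturally onto residue arithmetic, the following Python sketch accumulates an integer dot product one residue digit at a time and converts to binary only once at the end. It is integer-only and does not attempt to reproduce Maitrix’s fractional RNS method or hardware; the moduli and the function names are assumptions made for this example.

```python
from math import prod

# Assumed pairwise coprime 8-bit-sized moduli; range M is about 4.1e9.
MODULI = (251, 253, 255, 256)
M = prod(MODULI)

def rns_dot(a, b):
    """Dot product of two non-negative integer vectors, kept in RNS.

    Each modulus has its own small accumulator. No carries move between
    accumulators, so each one could in principle run in parallel.
    """
    acc = [0] * len(MODULI)
    for x, y in zip(a, b):
        for i, m in enumerate(MODULI):
            acc[i] = (acc[i] + (x % m) * (y % m)) % m
    return tuple(acc)

def from_rns(digits) -> int:
    """Single conversion back to binary (Chinese Remainder Theorem)."""
    total = 0
    for d, m in zip(digits, MODULI):
        Mi = M // m
        total += d * Mi * pow(Mi, -1, m)   # modular inverse, Python 3.8+
    return total % M

a = [12, 250, 7, 99]
b = [34, 3, 200, 45]
print(from_rns(rns_dot(a, b)))   # 7013
```

The result is exact as long as the true sum stays below M. Every intermediate accumulator value fits in a few bits regardless of how many products are summed, which is the property that makes this style of accumulation attractive.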

Today, Maitrix has unveiled the first working general-purpose RNS based co-processor, the first operational RNS based systolic matrix multiplier, and the first residue based arbitrary precision RNS library. Maitrix has solved the long-standing problem of direct error detection and correction of general purpose arithmetic. Maitrix is also working hard to publish peer reviewed papers and make its research freely available to all. Moreover, Maitrix has completed the most aggressive and substantial base of tested RNS circuit modules to date, all written in Verilog. These circuit modules can be easily used by professional developers to complete working circuits with performance metrics not attainable using binary arithmetic.

Screenshot of general purpose RNS computation, called “Modular Computation”, first performed in hardware on the Rez-9 processor and first demonstrated in December 2014 during Daniel Anderson’s thesis presentation at the University of Nevada, Las Vegas (UNLV).